Webscraping with RSelenium

Automate your browser actions

LISER

2023-03-17

- Motivation: when do we need RSelenium?

- Get started

- Basic example

- Advanced example

Static and dynamic pages

The web works with 3 languages:

- HTML: content and structure of the page

- CSS: style of the page

- JavaScript: interactions with the page

Static vs dynamic

Static webpage:

- all the information is loaded with the page;

- changing a parameter modifies the URL

Examples: Wikipedia, IMDb, election results from El Pais, etc.

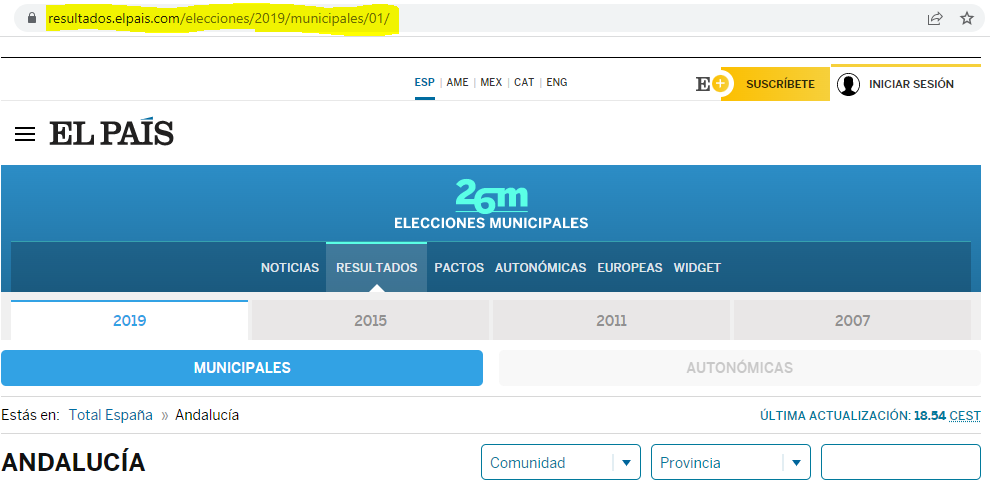

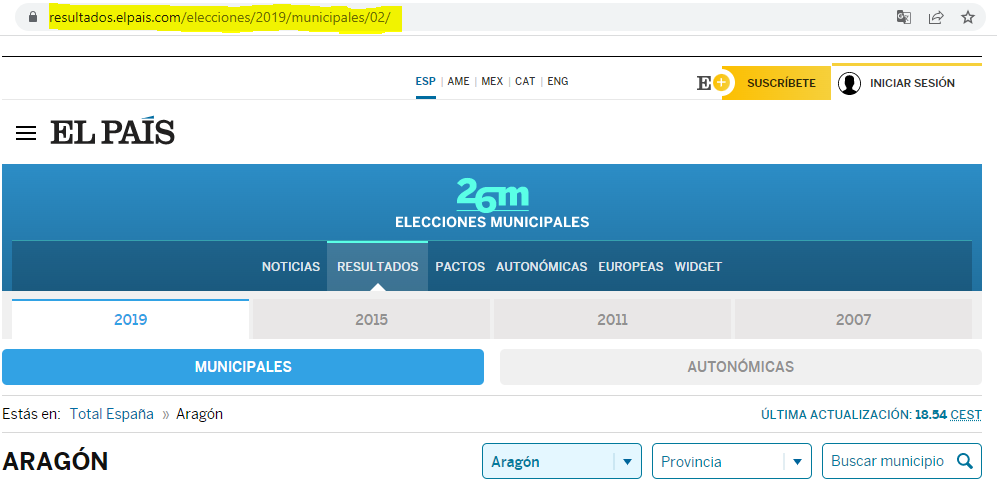

Example: election results in Spain from the El Pais website.

Dynamic webpage: the website uses JavaScript to fetch data from their server and dynamically update the page.

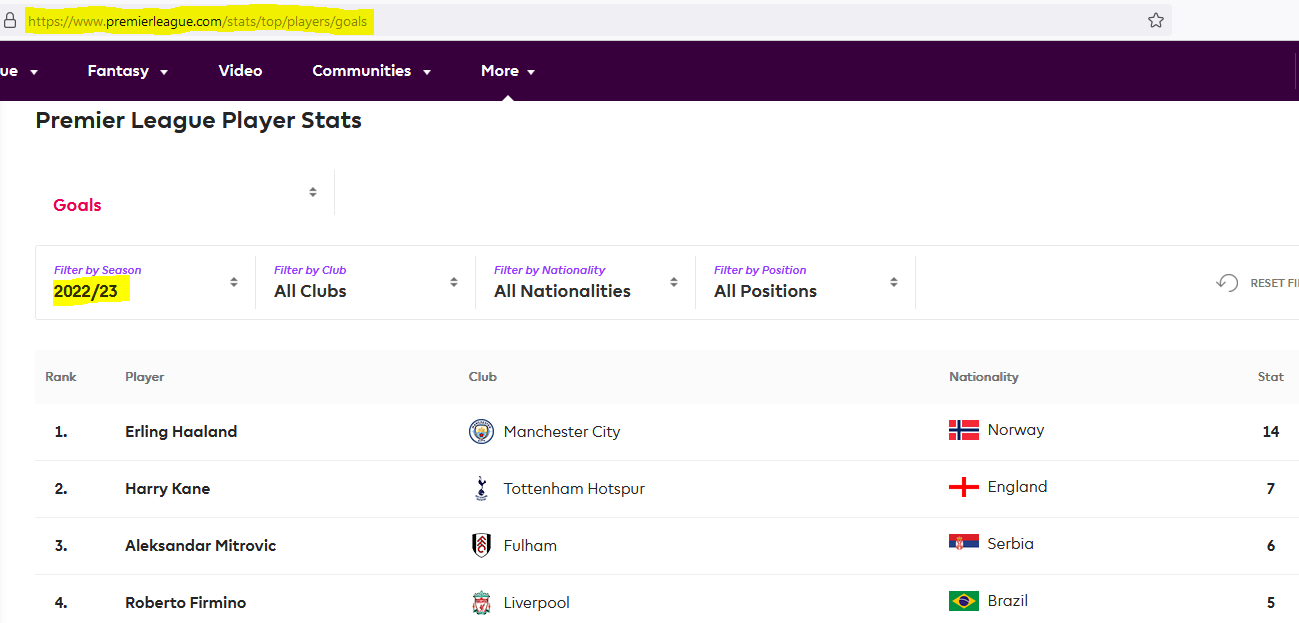

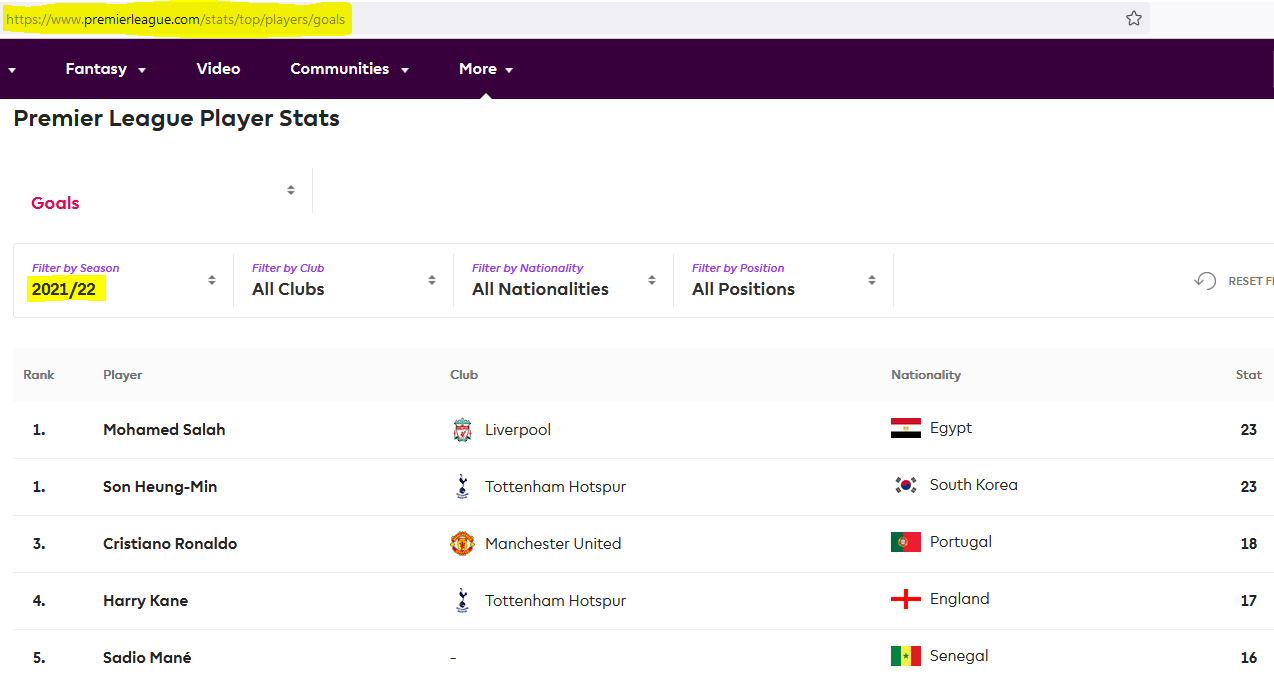

Example: Premier League stats.

Why is it harder to do webscraping with dynamic pages?

Webscraping a static website can be quite simple:

- you get a list of URLs;

- download the HTML for each of them;

- read and clean the HTML

and that’s it.

This is “easy” because you can identify two pages with different content just by looking at their URL.

In dynamic pages, there’s no obvious way to see that the inputs are different.

So it seems that the only way to get the data is to go manually through all pages.
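The static workflow described above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names (`build_urls`, `download_pages`, `read_page`), the `page` query parameter, and the `example.com` address are all hypothetical and do not come from the sites mentioned in this deck.

```r
library(rvest)

# Hypothetical helper: one URL per page, assuming the page number is passed
# as a query parameter (the parameter name "page" is an assumption).
build_urls <- function(base_url, pages) {
  paste0(base_url, "?page=", pages)
}

# Download each URL to a local .html file, pausing between requests.
download_pages <- function(urls, out_dir, pause = 1) {
  dir.create(out_dir, showWarnings = FALSE, recursive = TRUE)
  files <- file.path(out_dir, sprintf("page_%03d.html", seq_along(urls)))
  for (i in seq_along(urls)) {
    download.file(urls[i], destfile = files[i], quiet = TRUE)
    Sys.sleep(pause)
  }
  files
}

# Read one saved page and return the text of the nodes matching a CSS selector.
read_page <- function(file, css) {
  html_text(html_elements(read_html(file), css = css))
}

# example.com is a placeholder, not a site from this deck.
urls <- build_urls("https://example.com/results", 1:3)
```

The key point is the first helper: with a static site, the URL alone identifies the content, so the whole job reduces to a loop over URLs.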

(R)Selenium

Idea: control the browser from the command line.

“I wish I could click on this button to open a modal”

Almost everything you can do “by hand” in a browser, you can reproduce with Selenium:

| Action | Code |
|---|---|
| Open a browser | open() / navigate() |
| Click on something | clickElement() |
| Enter values | sendKeysToElement() |
| Go to previous/next page | goBack() / goForward() |
| Refresh the page | refresh() |
| Get all the HTML that is currently displayed | getPageSource() |

Get started

To initialize RSelenium, use rsDriver():

If everything works fine, this will print a bunch of messages and open a “marionette browser”.
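As a sketch, the initialization can be wrapped as follows. The `rsDriver()` arguments are the ones used in the demo code later in this deck; the wrapper names `start_browser()` and `stop_browser()` are hypothetical, and the browser is only launched when the code is run interactively.

```r
# Requires the RSelenium package (and Firefox) to be installed.

# Start a Selenium server and a Firefox session, and return both the
# rsDriver() result and its client (called remote_driver in this deck).
start_browser <- function() {
  driver <- RSelenium::rsDriver(browser = "firefox", chromever = NULL)
  list(driver = driver, remote_driver = driver[["client"]])
}

# Close the browser window and stop the Selenium server when done.
stop_browser <- function(session) {
  session$remote_driver$close()
  session$driver$server$stop()
  invisible(NULL)
}

if (interactive()) {
  session <- start_browser()
  remote_driver <- session$remote_driver
  # ... scraping steps go here ...
  stop_browser(session)
}
```

Stopping the server explicitly is worth the extra line: otherwise the port can stay busy and the next `rsDriver()` call may fail.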

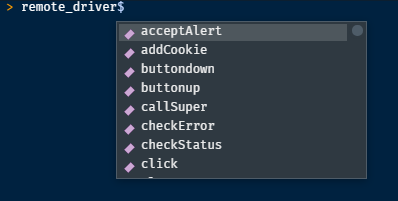

From now on, the main thing is to call methods on this object, in the form remote_driver$<function>().

Basic example

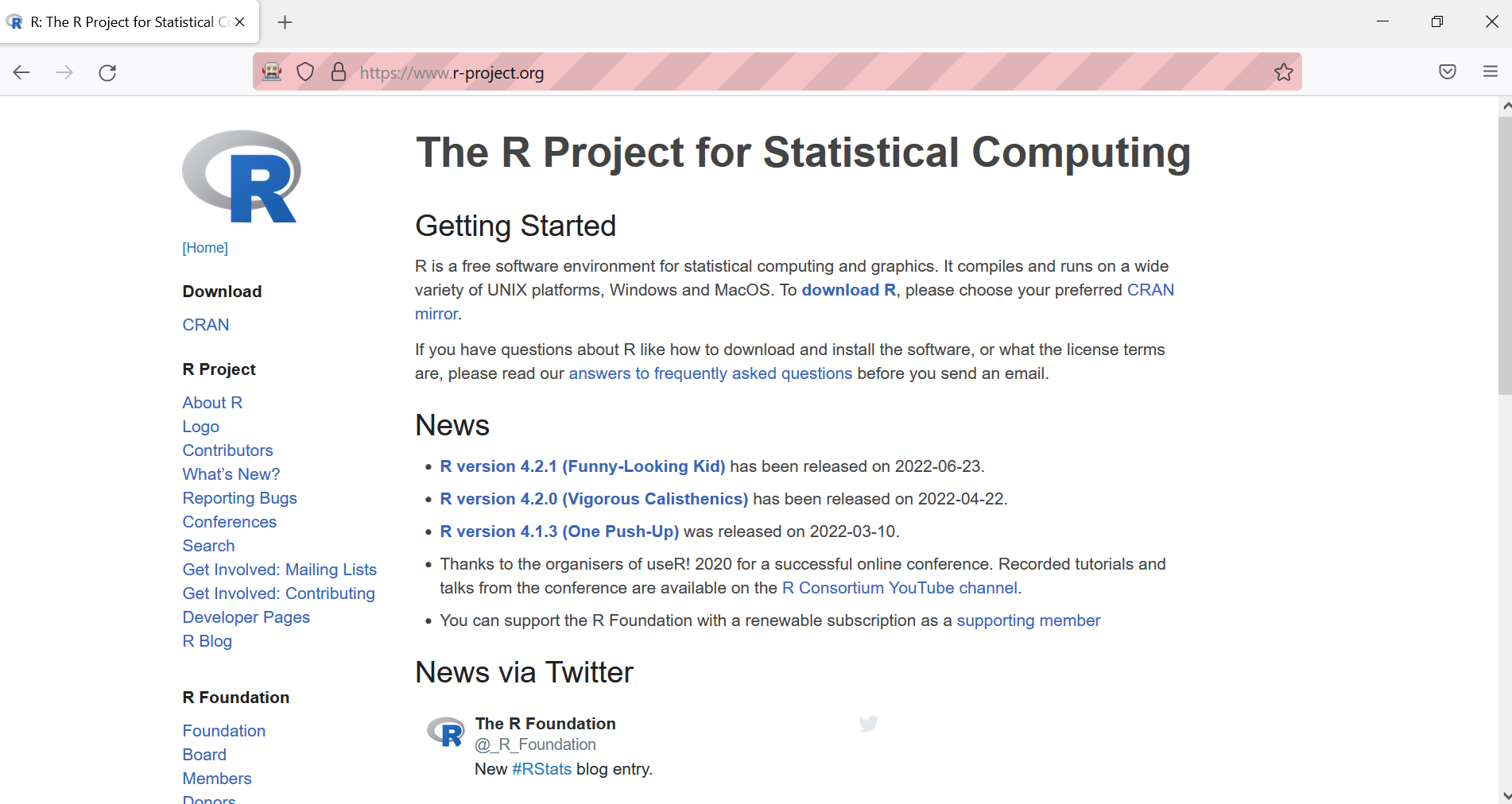

Objective: get the list of core contributors to R located on the R-project website.

How would you do it by hand?

- open the browser;

- go to https://r-project.org;

- in the left sidebar, click on the link “Contributors”;

and voilà!

How can we do these steps programmatically?

Open the browser and navigate

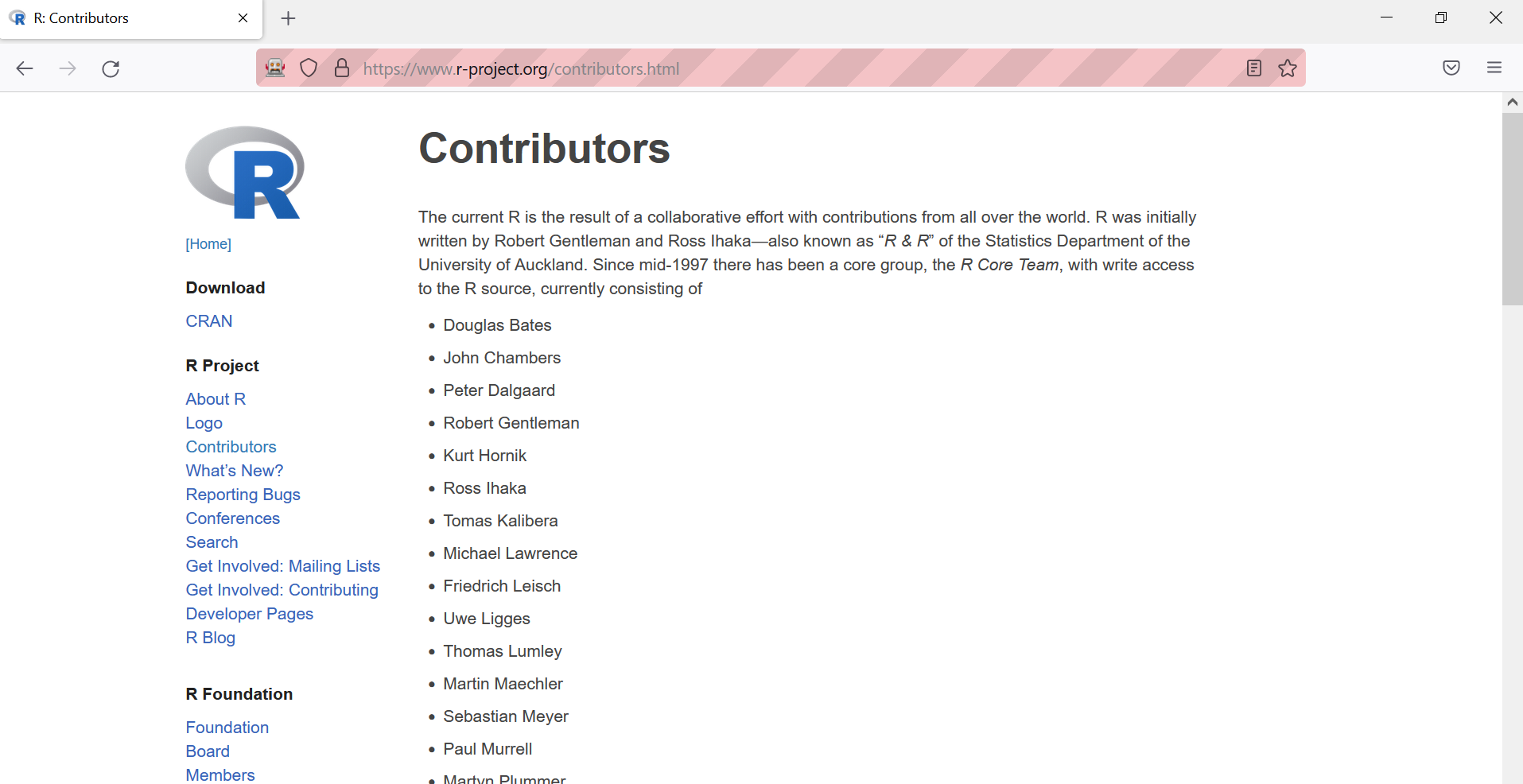
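In code, this step might look like the sketch below. It assumes `remote_driver` is the client obtained from `rsDriver()`; the wrapper `go_to()` is a hypothetical name used for illustration.

```r
# Navigate to a URL and return the address the browser ends up on.
go_to <- function(remote_driver, url) {
  remote_driver$navigate(url)
  # getCurrentUrl() returns a list; its first element is the URL string.
  remote_driver$getCurrentUrl()[[1]]
}

# Usage, once a browser session is running:
# go_to(remote_driver, "https://r-project.org")
```

Checking the current URL after navigating is a cheap way to notice redirects.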

Click on “Contributors”

This requires two things:

- find the element

- click on it

How can we find an element?

- humans -> eyes

- computers -> HTML/CSS

- Find the element

How can we find this link with RSelenium?

-> findElement

- class name ❌

- id ❌

- name ❌

- tag name ❌

- css selector ✔️

- link text ✔️

- partial link text ✔️

- xpath ✔️
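A minimal sketch of the link-text strategies, assuming `remote_driver` is an active client. The helper `find_link()` is hypothetical, and the CSS and XPath values in the comments are guesses about the page's HTML, not values taken from this deck.

```r
# Find a link by its visible text, either exactly or by a fragment of it.
find_link <- function(remote_driver, text, exact = TRUE) {
  strategy <- if (exact) "link text" else "partial link text"
  remote_driver$findElement(using = strategy, value = text)
}

# Usage, once a browser session is running:
# link <- find_link(remote_driver, "Contributors")
# link <- find_link(remote_driver, "Contrib", exact = FALSE)
#
# The other two working strategies would need a selector for the same link
# (both values below are assumptions about the page structure):
# remote_driver$findElement(using = "css selector", value = "a[href='contributors.html']")
# remote_driver$findElement(using = "xpath", value = "//a[text()='Contributors']")
```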

- Click it:
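The click itself is a single method call on the element that was found. The sketch below also reads the page title afterwards to confirm where the click led; the function name `click_and_report()` is hypothetical.

```r
# Click a link identified by its text, then return the title of the page
# that is displayed afterwards.
click_and_report <- function(remote_driver, link_text) {
  link <- remote_driver$findElement(using = "link text", value = link_text)
  link$clickElement()
  # getTitle() returns a list; its first element is the page title.
  remote_driver$getTitle()[[1]]
}

# Usage, once a browser session is running:
# click_and_report(remote_driver, "Contributors")
```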

We are now on the right page!

Last step: obtain the HTML of the page.
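A possible way to do this is sketched below, assuming `remote_driver` is an active client. The helper `save_page_source()` is a hypothetical name; the file name `contributors.html` matches the one read in the appendix.

```r
# Save the HTML currently displayed in the browser to a local file.
save_page_source <- function(remote_driver, file) {
  # getPageSource() returns a list; its first element is the HTML string.
  html <- remote_driver$getPageSource()[[1]]
  writeLines(html, file, useBytes = TRUE)
  invisible(file)
}

# Usage, once a browser session is running:
# save_page_source(remote_driver, "contributors.html")
```

Saving the raw HTML first, and cleaning it later, keeps the browser automation separate from the parsing.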

Advanced example

The previous example was not a dynamic page: we could have used the link to the page and applied webscraping methods for static webpages.

Let’s now dive into a more complex example, where RSelenium is the only way to obtain the data.

Example: Sao Paulo immigration museum

Steps:

- Open the website

- Enter “PORTUGUESA” in the input box

- Wait a bit for the page to load

- Open every modal “Ver Mais”

Quick demo

Initialize the remote driver and go to the website:

```r
library(RSelenium)

link <- "http://www.inci.org.br/acervodigital/livros.php"

# Automatically go to the website
driver <- rsDriver(browser = c("firefox"), chromever = NULL)
remote_driver <- driver[["client"]]
remote_driver$navigate(link)
```

Fill the field “NACIONALIDADE”:

```r
# Fill the nationality field
remote_driver$
  findElement(using = "id", value = "nacionalidade")$
  sendKeysToElement(list("PORTUGUESA"))
```

Find the button “Pesquisar” and click it:

```r
# Find the button "Pesquisar" and click it
remote_driver$
  findElement(using = "name", value = "Reset2")$
  clickElement()
```
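The step list mentions waiting for the page to load, which the demo code does not show. One possible approach, sketched here as an assumption rather than the method used in the demo, is to poll until the element of interest exists. The helper `wait_for_element()` is hypothetical; it relies on `findElements()`, which returns an empty list when nothing matches.

```r
# Poll the page until an element matching the locator appears, or stop with
# an error after `timeout` seconds.
wait_for_element <- function(remote_driver, using, value,
                             timeout = 10, interval = 0.5) {
  deadline <- Sys.time() + timeout
  repeat {
    found <- remote_driver$findElements(using = using, value = value)
    if (length(found) > 0) {
      return(found[[1]])
    }
    if (Sys.time() >= deadline) {
      stop("No element found for ", using, " = '", value, "' after ",
           timeout, " seconds", call. = FALSE)
    }
    Sys.sleep(interval)
  }
}

# Usage, once a browser session is running:
# wait_for_element(remote_driver, using = "id", value = "link_ver_detalhe")
```

Compared with a fixed `Sys.sleep()`, polling continues as soon as the element is there and fails loudly when it never shows up.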

Find the button “Ver Mais” and click it:

```r
# Find the button "Ver Mais" and click it
remote_driver$
  findElement(using = "id", value = "link_ver_detalhe")$
  clickElement()
```

Get the HTML that is displayed:

```r
# Get the HTML that is displayed in the modal
x <- remote_driver$getPageSource()
```

Exit the modal by pressing “Escape”:

```r
# Exit the modal by pressing "Escape"
remote_driver$
  findElement(using = "xpath", value = "/html/body")$
  sendKeysToElement(list(key = "escape"))
```

Next steps

- Do this for all modals

- Once all modals are scraped, go to the next page

- Hope that your code runs smoothly for 2000 pages.
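The first of these steps could be sketched as a loop. This is an illustration under assumptions: it supposes that every “Ver Mais” button on the page shares the id `link_ver_detalhe` used in the demo, so that `findElements()` returns all of them; the function name `scrape_modals()` and the file naming scheme are hypothetical. Moving to the next page is left out.

```r
# Open every modal on the current page, save the displayed HTML, and close
# the modal again. Returns the paths of the files that were written.
scrape_modals <- function(remote_driver, out_dir, page = 1, pause = 1) {
  dir.create(out_dir, showWarnings = FALSE, recursive = TRUE)
  # Assumption: all "Ver Mais" buttons share this id.
  buttons <- remote_driver$findElements(using = "id", value = "link_ver_detalhe")
  files <- character(0)
  for (i in seq_along(buttons)) {
    file <- file.path(out_dir, sprintf("page-%04d-modal-%02d.html", page, i))
    ok <- tryCatch({
      buttons[[i]]$clickElement()
      Sys.sleep(pause)  # give the modal time to load
      writeLines(remote_driver$getPageSource()[[1]], file)
      # Exit the modal by pressing "Escape", as in the demo
      remote_driver$
        findElement(using = "xpath", value = "/html/body")$
        sendKeysToElement(list(key = "escape"))
      TRUE
    }, error = function(e) {
      # Keep going if one modal fails, but report which one
      message("Modal ", i, " on page ", page, " failed: ", conditionMessage(e))
      FALSE
    })
    if (ok) files <- c(files, file)
  }
  files
}
```

Wrapping each modal in `tryCatch()` matters for long runs: a single failed click should be logged and skipped, not end a job that spans 2000 pages.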

And then what?

If everything goes well, we have collected a lot of .html files.

To clean them, we don’t need RSelenium or an internet connection. These are just text files, they are not “tied” to the website anymore.
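As a sketch of this offline step, the saved files can be parsed in one pass with rvest. The function name `parse_saved_pages()` and the CSS selector in the usage line are hypothetical; the right selector depends on the structure of the saved pages.

```r
library(rvest)

# Parse every .html file in a directory and extract the text of the nodes
# matching a CSS selector. Returns a list named by file name.
parse_saved_pages <- function(dir, css) {
  files <- list.files(dir, pattern = "\\.html$", full.names = TRUE)
  out <- lapply(files, function(f) {
    html_text(html_elements(read_html(f), css = css))
  })
  setNames(out, basename(files))
}

# Usage (directory and selector are placeholders):
# results <- parse_saved_pages("html_files", css = "td")
```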

Summary

Selenium in general is a very useful tool, but it should be used as a last resort. Try these first:

- APIs, packages

- static webscraping, which is usually faster

- custom POST requests

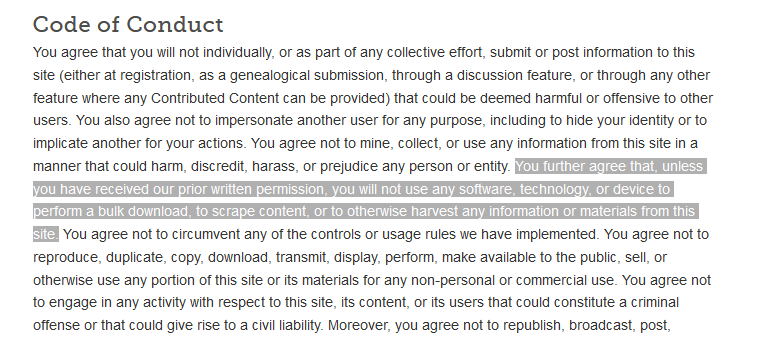

Ethics

Pay attention to a website’s Terms of Use/Service.

Some websites explicitly say that you are not allowed to programmatically access their resources.

Be respectful: make the scraping slow enough not to overload the server.

Not every website can handle tens of thousands of requests very quickly.
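One simple way to slow a scraper down is to wrap each action so that it always pauses first. The sketch below uses base R only; the wrapper name `slow_down()` and the two-second default are arbitrary choices for illustration.

```r
# Return a version of `f` that waits `delay` seconds before every call.
slow_down <- function(f, delay = 2) {
  force(f)
  force(delay)
  function(...) {
    Sys.sleep(delay)
    f(...)
  }
}

# Usage, once a browser session is running:
# slow_navigate <- slow_down(function(url) remote_driver$navigate(url), delay = 2)
# for (url in urls) slow_navigate(url)
```

Putting the pause inside the wrapper means it cannot be forgotten in one branch of a loop.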

Tip

For static webscraping, check out the package polite.

Thanks!

More complete presentation (100+ slides): https://www.rselenium-teaching.etiennebacher.com

- RSelenium installation issues

- error handling

- more details in general

Other presentation on some good practices with R:

Appendix

For reference, here’s the code to extract the list of contributors:

```r
library(rvest)

html <- read_html("contributors.html")

# Names listed as bullet points
bullet_points <- html %>%
  html_elements(css = "div.col-xs-12 > ul > li") %>%
  html_text()

# Names listed in the blockquote, separated by commas
blockquote <- html %>%
  html_elements(css = "div.col-xs-12.col-sm-7 > blockquote") %>%
  html_text() %>%
  strsplit(., split = ", ")

# Remove line breaks, periods and the word "and".
# Note: the pattern "and" also matches inside names (e.g. "Bivand" becomes
# "Biv"); "\\band\\b" would only match the standalone word.
blockquote <- blockquote[[1]] %>%
  gsub("\\r|\\n|\\.|and", "", .)

# Names listed in a later paragraph, separated by commas
others <- html %>%
  html_elements(xpath = "/html/body/div/div[1]/div[2]/p[5]") %>%
  html_text() %>%
  strsplit(., split = ", ")

others <- others[[1]] %>%
  gsub("\\r|\\n|\\.|and", "", .)

all_contributors <- c(bullet_points, blockquote, others)
```

[1] "Douglas Bates" "John Chambers" "Peter Dalgaard"

[4] "Robert Gentleman" "Kurt Hornik" "Ross Ihaka"

[7] "Tomas Kalibera" "Michael Lawrence" "Friedrich Leisch"

[10] "Uwe Ligges" "Thomas Lumley" "Martin Maechler"

[13] "Sebastian Meyer" "Paul Murrell" "Martyn Plummer"

[16] "Brian Ripley" "Deepayan Sarkar" "Duncan Temple Lang"

[19] "Luke Tierney" "Simon Urbanek" "Valerio Aimale"

[22] "Suharto Anggono" "Thomas Baier" "Gabe Becker"

[25] "Henrik Bengtsson" "Roger Biv" "Ben Bolker"

[28] "David Brahm" "Göran Broström" "Patrick Burns"

[31] "Vince Carey" "Saikat DebRoy" "Matt Dowle"

[34] "Brian D’Urso" "Lyndon Drake" "Dirk Eddelbuettel"

[37] "Claus Ekstrom" "Sebastian Fischmeister" "John Fox"

[40] "Paul Gilbert" "Yu Gong" "Gabor Grothendieck"

[43] "Frank E Harrell Jr" "Peter M Haverty" "Torsten Hothorn"

[46] "Robert King" "Kjetil Kjernsmo" "Roger Koenker"

[49] "Philippe Lambert" "Jan de Leeuw" "Jim Lindsey"

[52] "Patrick Lindsey" "Catherine Loader" "Gordon Maclean"

[55] "Arni Magnusson" "John Maindonald" "David Meyer"

[58] "Ei-ji Nakama" "Jens Oehlschägel" "Steve Oncley"

[61] "Richard O’Keefe" "Hubert Palme" "Roger D Peng"

[64] "José C Pinheiro" "Tony Plate" "Anthony Rossini"

[67] "Jonathan Rougier" "Petr Savicky" "Günther Sawitzki"

[70] "Marc Schwartz" "Arun Srinivasan" "Detlef Steuer"

[73] "Bill Simpson" "Gordon Smyth" "Adrian Trapletti"

[76] "Terry Therneau" "Rolf Turner" "Bill Venables"

[79] "Gregory R Warnes" "Andreas Weingessel" "Morten Welinder"

[82] "James Wettenhall" "Simon Wood" " Achim Zeileis"

[85] "J D Beasley" "David J Best" "Richard Brent"

[88] "Kevin Buhr" "Michael A Covington" "Bill Clevel"

[91] "Robert Clevel," "G W Cran" "C G Ding"

[94] "Ulrich Drepper" "Paul Eggert" "J O Evans"

[97] "David M Gay" "H Frick" "G W Hill"

[100] "Richard H Jones" "Eric Grosse" "Shelby Haberman"

[103] "Bruno Haible" "John Hartigan" "Andrew Harvey"

[106] "Trevor Hastie" "Min Long Lam" "George Marsaglia"

[109] "K J Martin" "Gordon Matzigkeit" "C R Mckenzie"

[112] "Jean McRae" "Cyrus Mehta" "Fionn Murtagh"

[115] "John C Nash" "Finbarr O’Sullivan" "R E Odeh"

[118] "William Patefield" "Nitin Patel" "Alan Richardson"

[121] "D E Roberts" "Patrick Royston" "Russell Lenth"

[124] "Ming-Jen Shyu" "Richard C Singleton" "S G Springer"

[127] "Supoj Sutanthavibul" "Irma Terpenning" "G E Thomas"

[130] "Rob Tibshirani" "Wai Wan Tsang" "Berwin Turlach"

[133] "Gary V Vaughan" "Michael Wichura" "Jingbo Wang"

[136] "M A Wong"

Session information

─ Session info ───────────────────────────────────────────────────────────────

setting value

version R version 4.2.2 (2022-10-31 ucrt)

os Windows 10 x64 (build 19044)

system x86_64, mingw32

ui RTerm

language (EN)

collate English_Europe.utf8

ctype English_Europe.utf8

tz Europe/Paris

date 2023-03-17

pandoc 3.1 @ C:/Users/etienne/AppData/Local/Pandoc/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────

package * version date (UTC) lib source

cli 3.6.0 2023-01-09 [1] CRAN (R 4.2.2)

digest 0.6.31 2022-12-11 [1] CRAN (R 4.2.2)

evaluate 0.20 2023-01-17 [1] CRAN (R 4.2.2)

fastmap 1.1.1 2023-02-24 [1] CRAN (R 4.2.2)

glue 1.6.2 2022-02-24 [1] CRAN (R 4.2.0)

htmltools 0.5.4 2022-12-07 [1] CRAN (R 4.2.2)

httr 1.4.5 2023-02-24 [1] CRAN (R 4.2.2)

jsonlite 1.8.4 2022-12-06 [1] CRAN (R 4.2.2)

knitr 1.42 2023-01-25 [1] CRAN (R 4.2.2)

lifecycle 1.0.3 2022-10-07 [1] CRAN (R 4.2.1)

magrittr 2.0.3 2022-03-30 [1] CRAN (R 4.2.0)

R6 2.5.1 2021-08-19 [1] CRAN (R 4.2.0)

rlang 1.1.0.9000 2023-03-15 [1] Github (r-lib/rlang@9b45027)

rmarkdown 2.20 2023-01-19 [1] CRAN (R 4.2.2)

rstudioapi 0.14 2022-08-22 [1] CRAN (R 4.2.1)

rvest * 1.0.3 2022-08-19 [1] CRAN (R 4.2.1)

selectr 0.4-2 2019-11-20 [1] CRAN (R 4.2.0)

sessioninfo 1.2.2 2021-12-06 [1] CRAN (R 4.2.0)

stringi 1.7.12 2023-01-11 [1] CRAN (R 4.2.2)

stringr 1.5.0 2022-12-02 [1] CRAN (R 4.2.2)

vctrs 0.5.2.9000 2023-03-15 [1] Github (r-lib/vctrs@0dea6ca)

xfun 0.37 2023-01-31 [1] CRAN (R 4.2.2)

xml2 1.3.3 2021-11-30 [1] CRAN (R 4.2.0)

yaml 2.3.7 2023-01-23 [1] CRAN (R 4.2.2)

[1] C:/Users/etienne/AppData/Local/R/win-library/4.2

[2] C:/R/library

──────────────────────────────────────────────────────────────────────────────